Presentation Draft 3

Speaker 0

Hi everybody, we’re team 2D|!2D (maybe draw it on the board for good measure), and we’re here to present on texture mapping and filtering: specifically bilinear and trilinear interpolation, mipmaps, and anisotropic filtering. To start, Speaker 1 will give a quick breakdown of texture mapping before we move into more specific use cases.

Speaker 1

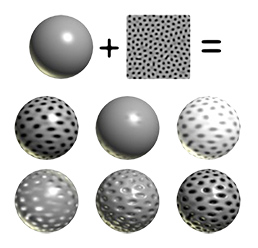

Texture mapping, at a basic level, is a way to determine color, shading, lighting, etc. for each pixel by pasting images onto our objects in order to create the illusion of realism. This lets us have much more detailed graphics without sacrificing computation time. The key idea is that we are mapping each point on the original texture (in the texture coordinate system) to a point on the new image we’re trying to create. For example, if we’re displaying a texture somewhere where the lighting in the environment rarely or never changes, we can precompute the texture so we don’t have to calculate individual pixel values at render time. Now, here’s Jason to talk about linear interpolation and how it’s useful in texture filtering.

Jason

Interpolation is the estimation of a value based on other “nearby” values. It’s extremely useful in computer graphics because we can use it to decide how to color and texture a grid of texels (we’ll get to that later) based on either a formula or a lower-resolution original map.

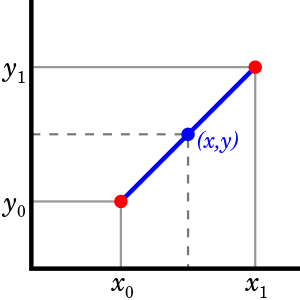

To start with a simple example, 1D interpolation is the estimation of a value between two points, based on the values at those two points and the distance from the new point to each of them. If the new point is closer to one point than the other, its value will be closer to that point's value, and vice versa.

1D interpolation

For instance, let's assume that the dot at the bottom left represents the number 10, and the dot at the top right represents the number 100. Every point on the blue line is a transition from 10 to 100. The plotted (x, y) point looks roughly halfway between the two, so we could fairly easily say it's around 55. This is the idea behind interpolation.
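The 10-to-100 example can be checked with a few lines of Python. This is only a minimal sketch: the function name `lerp` and the parameter `t` (how far along the line the new point sits, from 0 to 1) are our own labels, not something from the slides.

```python
def lerp(a: float, b: float, t: float) -> float:
    """Linearly interpolate between a (at t = 0) and b (at t = 1)."""
    return (1.0 - t) * a + t * b


# Endpoints 10 and 100, with the new point halfway along the line.
midpoint = lerp(10.0, 100.0, 0.5)
print(midpoint)  # 55.0
```

Moving `t` toward 0 pulls the result toward 10, and moving it toward 1 pulls it toward 100.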

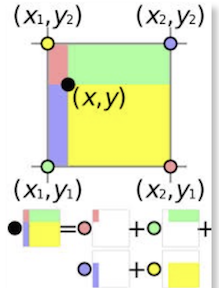

To explain bilinear interpolation, let’s draw an example on the board. Say you have a pixel map and you want to increase the resolution of it, or “zoom in”, so to speak.

To do this, we can define any new point we want to create based on the points around it. This new point is somewhere in between the 4 nearest pixels. (If we're just trying to double the resolution, it would be dead-center, and pretty easy to calculate.)

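Here’s an equation to calculate the interpolated color $c(u, v)$, where $u$ and $v$ are the texture coordinates to be mapped and the texture is $n_x$ by $n_y$ texels,

where

$i = \lfloor u n_x \rfloor$ and $j = \lfloor v n_y \rfloor$

$c_{ij}$ - top left

$c_{(i+1)j}$ - top right

$c_{i(j+1)}$ - bottom left

$c_{(i+1)(j+1)}$ - bottom right

$u' = u n_x - \lfloor u n_x \rfloor$ = the decimal portion of $u n_x$

$v' = v n_y - \lfloor v n_y \rfloor$ = the decimal portion of $v n_y$

$$
c(u, v) = (1 - u')(1 - v')\,c_{ij} + u'(1 - v')\,c_{(i+1)j} + (1 - u')\,v'\,c_{i(j+1)} + u'v'\,c_{(i+1)(j+1)}
$$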

2D interpolation
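Here is a rough Python sketch of the bilinear formula, in case it helps to see it run. It assumes a grayscale texture stored as a list of rows (`texture[j][i]`), with `u` and `v` in the range 0 to 1; the function name and the edge clamping are our own choices for illustration.

```python
import math


def bilinear_sample(texture, u, v):
    """Bilinearly interpolate a grayscale texture at (u, v) in [0, 1]."""
    n_y = len(texture)        # number of rows
    n_x = len(texture[0])     # number of columns
    x, y = u * n_x, v * n_y
    i, j = math.floor(x), math.floor(y)
    u_frac, v_frac = x - i, y - j   # the "decimal portions" u' and v'

    # Clamp indices so lookups at the right/bottom edge stay in range.
    i0, j0 = min(i, n_x - 1), min(j, n_y - 1)
    i1, j1 = min(i + 1, n_x - 1), min(j + 1, n_y - 1)

    c00 = texture[j0][i0]  # top left
    c10 = texture[j0][i1]  # top right
    c01 = texture[j1][i0]  # bottom left
    c11 = texture[j1][i1]  # bottom right

    return ((1 - u_frac) * (1 - v_frac) * c00
            + u_frac * (1 - v_frac) * c10
            + (1 - u_frac) * v_frac * c01
            + u_frac * v_frac * c11)


tiny = [[0.0, 10.0],
        [20.0, 30.0]]
print(bilinear_sample(tiny, 0.25, 0.25))  # 15.0, the average of all four
```

The sample at (0.25, 0.25) lands dead-center between the four texels, so each gets a weight of ¼.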

3D linear interpolation:

$$
c(u, v, w) = (1 - u')(1 - v')(1 - w')\,c_{ijk} + u'(1 - v')(1 - w')\,c_{(i+1)jk} + \dots
$$
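One way to sketch the 3D case in Python is as two 2D interpolations blended along the third axis; multiplying out the nested blends gives the same eight weighted terms as the product formula. This assumes a volume stored as `volume[k][j][i]` with `u`, `v`, `w` in 0 to 1, and all names here are illustrative.

```python
import math


def lerp(a, b, t):
    """Linearly interpolate between a (t = 0) and b (t = 1)."""
    return (1.0 - t) * a + t * b


def trilinear_sample(volume, u, v, w):
    """Trilinearly interpolate a scalar volume at (u, v, w) in [0, 1]."""
    n_z, n_y, n_x = len(volume), len(volume[0]), len(volume[0][0])
    x, y, z = u * n_x, v * n_y, w * n_z
    i, j, k = math.floor(x), math.floor(y), math.floor(z)
    u_frac, v_frac, w_frac = x - i, y - j, z - k

    # Clamp indices so lookups on the far faces stay in range.
    i0, i1 = min(i, n_x - 1), min(i + 1, n_x - 1)
    j0, j1 = min(j, n_y - 1), min(j + 1, n_y - 1)
    k0, k1 = min(k, n_z - 1), min(k + 1, n_z - 1)

    def bilinear_in_slice(kk):
        # Interpolate along u on two rows, then along v between them.
        top = lerp(volume[kk][j0][i0], volume[kk][j0][i1], u_frac)
        bottom = lerp(volume[kk][j1][i0], volume[kk][j1][i1], u_frac)
        return lerp(top, bottom, v_frac)

    # Blend the two slice results along w.
    return lerp(bilinear_in_slice(k0), bilinear_in_slice(k1), w_frac)


cube = [[[0.0, 10.0], [20.0, 30.0]],
        [[40.0, 50.0], [60.0, 70.0]]]
print(trilinear_sample(cube, 0.25, 0.25, 0.25))  # 35.0, the average of all eight
```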

Leslie

MIP (Latin: “multum in parvo”, meaning many things in a small place) Maps! (or mipmaps)

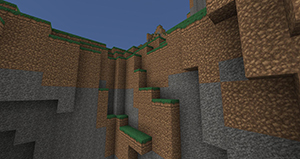

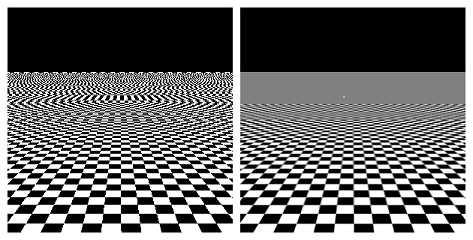

What’s the problem with bilinear filtering? Recall that for each destination pixel, bilinear filtering finds the closest matching location in the source pixel grid and then interpolates between the 4 nearest pixel values. This gives a smoother (but blurrier) result, but scaling an image down to less than half its size means some source pixels are skipped entirely. (maybe talk about what happens when image is scaled to a single pixel - what color should the pixel be? Plus averaging all the colors is too expensive!) This creates noisy aliasing. So what method can we use to create smoother texture maps? We want a resolution that is not so high that it causes aliasing, but not so low that we lose detail. So what about using images of varying resolutions, depending on the distance between the object and the viewpoint?

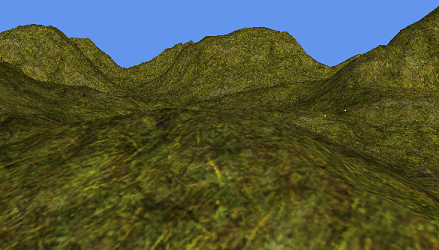

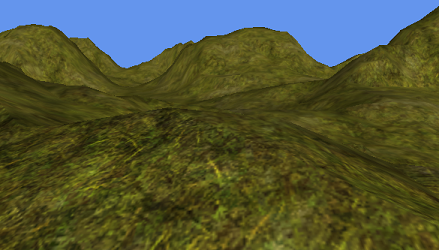

Let’s talk about mipmaps! A mipmap is a sequence of copies of a texture image, each shrunk down to a progressively smaller resolution. Together these images help reduce aliasing and support the illusions of depth and perspective in 2D texture mapping (see the example images). The images are precalculated, meaning the filtering is done ahead of time rather than while the scene is being rendered, which helps remove artifacts and stray aliasing.

No mipmapping - noisy aliasing

With mipmapping - reduced aliasing

Each image in the mipmap is half the width and half the height of the previous image, so each dimension shrinks by a factor of 2 per level. For example (talk about images):

- Original = 256x256

- Mip 1 = 128x128

- Mip 2 = 64x64

- Mip 3 = 32x32

- Mip 4 = 16x16

- Mip 5 = 8x8

- Mip 6 = 4x4

- Mip 7 = 2x2

- Mip 8 = 1x1
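The level sizes listed above can be reproduced with a short sketch. We are assuming a square, power-of-two grayscale image and a simple 2x2 box filter (averaging each block of four pixels) to build each level; real tools may use other filters, and the function names are our own.

```python
def downsample(image):
    """Halve width and height by averaging each 2x2 block of pixels."""
    height, width = len(image), len(image[0])
    return [
        [
            (image[2 * r][2 * c] + image[2 * r][2 * c + 1]
             + image[2 * r + 1][2 * c] + image[2 * r + 1][2 * c + 1]) / 4.0
            for c in range(width // 2)
        ]
        for r in range(height // 2)
    ]


def build_mip_chain(image):
    """Return [original, mip 1, mip 2, ...] down to a 1x1 image."""
    chain = [image]
    while len(chain[-1]) > 1:
        chain.append(downsample(chain[-1]))
    return chain


# A 256x256 checkerboard of 0s and 1s as the original texture.
base = [[float((r + c) % 2) for c in range(256)] for r in range(256)]
chain = build_mip_chain(base)
sizes = [(len(level[0]), len(level)) for level in chain]
print(sizes)  # (256, 256), (128, 128), ... down to (1, 1)
```

This also answers the "single pixel" question for the checkerboard: the 1x1 level ends up as the average of every texel, computed once ahead of time instead of per frame.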

Images: mipmapping helps reduce spatial aliasing, as seen in these checkered images

Besides creating smoother anti-aliased images, what are some not-so-obvious perks of mipmaps? The first is that mipmaps do not take up much additional memory. Each level has ¼ as many texels as the one before it, so the whole chain adds only about ⅓ of the original’s memory: ¼ original size + 1/16 original size + 1/64 original size + … + 1/(4^n) original size is a geometric series that approaches ⅓. Rendering is also faster, because the smaller mipmap levels fit in cache much better than the full-size texture, so most destination pixels can be rendered without going back to main memory.
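As a quick sanity check on the ⅓ figure, this small sketch adds up the texels in every mip level of a square, power-of-two texture and compares the total to the original. The function name is ours.

```python
def mip_overhead(base_size):
    """Extra texels used by all mip levels, as a fraction of the original."""
    base_texels = base_size * base_size
    extra_texels = 0
    size = base_size // 2
    while size >= 1:
        extra_texels += size * size
        size //= 2
    return extra_texels / base_texels


overhead = mip_overhead(256)
print(overhead)  # just under 1/3
```

For a 256x256 original, the eight smaller levels add 21,845 texels on top of 65,536, which is slightly less than one third.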

Sahar

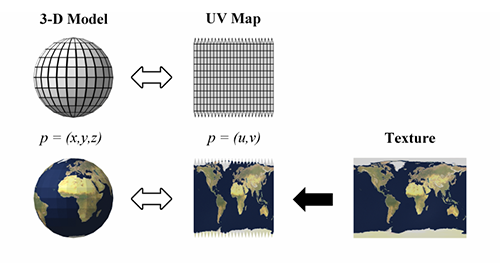

As already mentioned, 2D texture mapping is a technique where a 2D image is wrapped (or “mapped”) onto a 3D model. The 2D texture can be mapped onto the surface of a simple 3D geometric shape, such as a plane, cube, cylinder, or sphere, or onto a more general curve or surface such as a NURBS surface, which we will discuss later. But that’s only the first step of the process! When the target is a polygonal object, there is a second step: the 3D texture created in the first step (by mapping the 2D texture onto the simple shape’s surface) is then mapped onto the surface of an arbitrarily shaped object [Reference1], such as our 3D model. This is known as a two-part mapping method [Reference1].

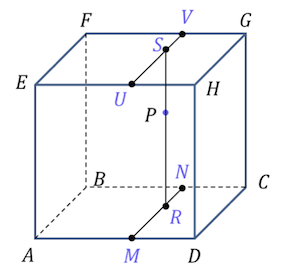

The above image is an example of this two-part mapping method and of how a 2D texture is mapped onto a 3D model. These texture maps can be used to control a variety of characteristics of a model’s surface, such as displacement, bumpiness, transparency, color, and more. But let’s take it a step further. How do we actually go from a 2D image to a 3D object in our computer graphics space?

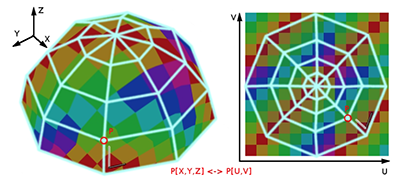

Well, in order to apply a texture to an object, we need texture mapping coordinates, and this is where UV mapping comes into play. Texture mapping coordinates in texture space are represented as (U, V). In the example above, we talked about a polygonal object and how UV mapping is implemented with a two-part mapping method. For a NURBS surface, things are quite different. NURBS stands for non-uniform rational B-spline, a mathematical model used to represent various curves and surfaces. A NURBS surface is essentially a rectangular patch with its own UV coordinates, so those coordinates are naturally used as the texture mapping coordinates. For polygonal objects, two-part mapping techniques are needed because a polygonal mesh can have an arbitrary number of vertices, and each vertex needs texture mapping coordinates created for it.

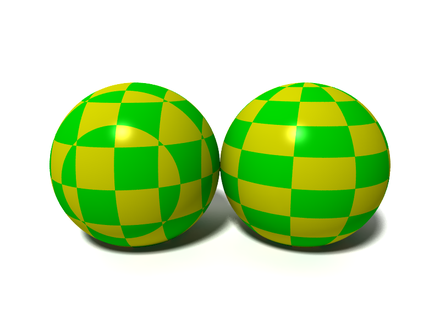

However, in 3D modeling, a lot of thought has to go into accurately mapping the texture from the 2D image to the 3D model. We know we use UV mapping to project a texture map onto a 3D object, but how does this work? The letters “U” and “V” denote the axes of the 2D texture, playing the role of “X” and “Y”. They are used because “X”, “Y”, and “Z” already denote the axes of the object in the 3D modeling space [Reference2].

Above is an image of two checkered spheres. The sphere on the left does not have UV mapping applied, and the sphere on the right does. This shows why UV mapping is important: it lets us apply more detail with our texture maps, rather than relying only on the rendering system’s built-in procedural materials and textures, which cannot always create the high level of realism we are looking for in a finished product.

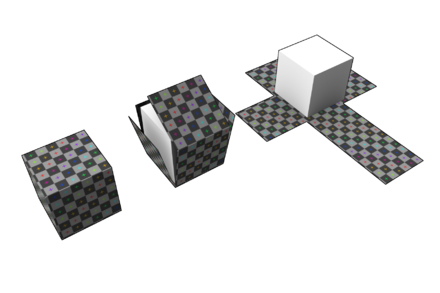

How do we actually create the texture mapping coordinates, though? How do we go from a texture with “X” and “Y” axes to the texture mapping coordinates “U” and “V”, and then map those onto an object with “X”, “Y”, and “Z” coordinates in a 3D space?

Well, the easiest way to think about this is to look at the above image of the box. If you were to cut the box along its seams and lay it out flat, you would see the image in 2D, where “U” is the horizontal direction and “V” is the vertical direction. These are our texture mapping coordinates. When the box is put back together again, each UV location on the texture is transferred to an XYZ location on the box [Reference 2]. 3D modelers, engineers, and other professionals who use texture mapping normally work in a modeling package that includes a UV Image Editor, in which you can designate which seams or edges to cut or sew together. This is the UV unwrapping process.

Here is an example of what the 3D object may look like in UV space. As you can see, the size and shape of the marked faces look quite different in each space, and UV mapping is applied per face, not per vertex. This means a shared vertex can have different UV coordinates in each of its triangles, so adjacent triangles can be cut apart and positioned on different areas of the texture map.
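To make the per-face idea concrete, here is a small hypothetical data layout in Python: two triangles that share vertices in 3D but carry their own UVs per corner. The positions, UV values, and helper name are all made up for illustration and are not taken from any particular modeling package.

```python
# 3D positions, shared by index between triangles.
positions = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]

# Each triangle stores its vertex indices plus one UV pair per corner.
triangles = [
    {"vertices": (0, 1, 2), "uvs": ((0.0, 0.0), (0.5, 0.0), (0.5, 0.5))},
    {"vertices": (0, 2, 3), "uvs": ((0.6, 0.6), (1.0, 1.0), (0.6, 1.0))},
]


def uvs_for_vertex(tris, vertex_index):
    """Collect every distinct UV assigned to one vertex across all faces."""
    found = set()
    for tri in tris:
        for corner, index in enumerate(tri["vertices"]):
            if index == vertex_index:
                found.add(tri["uvs"][corner])
    return found


# Vertex 0 sits on a seam: it maps to two different spots on the texture.
print(uvs_for_vertex(triangles, 0))
# Vertex 1 belongs to one triangle only, so it has a single UV.
print(uvs_for_vertex(triangles, 1))
```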

In conclusion, texture mapping is an intricate process in which a 2D image is wrapped onto a 3D model, and the UV mapping process at its simplest requires three steps: unwrapping the mesh, creating the texture, and applying the texture. UV mapping plays a huge role in helping artists across different fields apply these unique texture maps to a 3D model: they cut and sew the seams of a 3D object, convert its surface to UV coordinates, and wrap the new texture map onto the model in 3D space. The result can be customized further with photo editing or other techniques that enhance the new model.

Justin

Hi guys, so you have already gotten the gist of filtering so far. I’m going to build on that by talking about anisotropic filtering. The difference between anisotropic filtering and bilinear or trilinear filtering is in its name. Bilinear and trilinear filtering are isotropic, meaning that the filtering works the same in all directions. However, we don’t always want filtering to work this way. For example, in many modern games the player views surfaces that recede into the distance. If a game used only bilinear or trilinear filtering to render them, those surfaces would end up looking blurry. Here is an image of exactly that case. To fix this, the game needs a non-isotropic filtering pattern, hence anisotropic filtering. It works by sampling textures on surfaces that are far away or at steep angles over a trapezoidal or rectangular footprint instead of a square one. The quality levels come in powers of 2: 2x, 4x, 8x, 16x. Anisotropic filtering can usually go up to 16x without severely affecting performance. Of course, if we had infinite processing power, textures could be filtered at much higher levels.
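To give a feel for the idea, here is a very simplified Python sketch: instead of one square sample, it averages several samples spread along the long axis of the pixel's footprint in texture space, capped at a maximum count such as 16. Actual GPU implementations differ and are vendor-specific; the function names, the `axis_u`/`axis_v` parameters (the footprint's long axis in UV units), and the nearest-texel lookup are all assumptions for illustration.

```python
import math


def nearest_sample(texture, u, v):
    """Look up the nearest texel for (u, v), clamped to the texture edges."""
    n_y, n_x = len(texture), len(texture[0])
    i = min(max(int(u * n_x), 0), n_x - 1)
    j = min(max(int(v * n_y), 0), n_y - 1)
    return texture[j][i]


def anisotropic_sample(texture, u, v, axis_u, axis_v,
                       max_aniso=16, sample=nearest_sample):
    """Average several taps spread along the footprint's long axis."""
    n_y, n_x = len(texture), len(texture[0])
    # Length of the long axis measured in texels decides how many taps to take.
    length_texels = math.hypot(axis_u * n_x, axis_v * n_y)
    taps = max(1, min(max_aniso, math.ceil(length_texels)))
    total = 0.0
    for t in range(taps):
        offset = (t + 0.5) / taps - 0.5  # evenly spaced in (-0.5, 0.5)
        total += sample(texture, u + offset * axis_u, v + offset * axis_v)
    return total / taps


# A 4x4 texture whose value increases from left to right.
stripes = [[0.0, 1.0, 2.0, 3.0] for _ in range(4)]
# Footprint stretched across the full width: four taps get averaged.
print(anisotropic_sample(stripes, 0.5, 0.5, 1.0, 0.0))  # 1.5
# No stretch at all: falls back to a single tap.
print(anisotropic_sample(stripes, 0.5, 0.5, 0.0, 0.0))  # 2.0
```

Lowering `max_aniso` (for example to 2) limits the number of taps, which is the quality-versus-cost trade-off behind the 2x to 16x settings.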

Will add more...